Voice Recognition Technology vs. Graphical User Interface

Voice recognition technology, such as Siri and Alexa, is growing in popularity, leading many web design experts to wonder how voice could change the way users interact with interfaces. It raises a question: will voice overtake the graphical user interface? With so many on-screen elements currently designed around text input, many wonder how much needs to change and how soon that change needs to take place. Voice user interface design has not yet displaced the graphical user interface, and whether one will overtake the other remains to be seen.

Will Voice Recognition Technology Overtake the Graphical User Interface?

While Alexa and Siri are fresh innovations, voice recognition technology has been around for a long time. Before the original “Star Trek” episodes featured the talking computer, Bell Laboratories invented Audrey in 1952. Audrey only understood digits spoken by one voice at a time, but it marked the beginning of modern voice technology.

Not long after, IBM debuted the Shoebox, a machine that could understand 16 English words. Inventors around the globe worked to develop and refine hardware that turned speech processing and voice technology into meaningful data, gradually developing systems that understood more vowels and consonants, eventually shaping them into words.

During the 1970s, funding from the U.S. Department of Defense led to the development of Harpy, a system that understood 1,011 words and used beam search to find sentence possibilities. New approaches in the 1980s led to systems that could predict words based on probability and established speech patterns.

Before Alexa and Siri, voice recognition technology entered homes in the form of a child’s toy. Worlds of Wonder’s Julie doll debuted in 1987, and it responded to input from children. While voice recognition technology has come a long way from the few sentences and songs Julie came with, humans have been developing speech processing devices since the beginning of the tech era.

In childhood, people develop speech years before they begin to recognize graphics. Near the end of life, adults who can no longer drive and have lost much of their dexterity are still able to talk. Speech remains the most intuitive and comfortable form of communication, so speech processing is crucial. As voice user interfaces increasingly supplement traditional UI, designers seek to tap into that mode of interaction.

What Else Hasn’t Changed

Voice recognition technology has evolved, but most aspects of interface remain the same as they have been since early computers. Text remains the primary means of communication on both desktop and mobile platforms. Users interact with on-screen elements using a keyboard, a mouse, gestures, or touches, but the devices themselves rely on user input.

Original conversations with computers involved the user typing in a textual command, hitting enter, and reading the computer’s response. Sometimes when the computer didn’t recognize the input, the communication resulted in user frustration. The user had to modify the input to find a format the computer recognized to continue the conversation. Over time, that communication became more streamlined as both humans and computers developed a more sophisticated understanding of how to communicate.

Designing for UX using voice recognition technology requires the skills designers already possess. When designing for voice, experts show users the actions they can take and streamline processes. Designing for conversation follows the same principles as designing for a visual interface; it just uses natural language.

Dark Predictions

Some experts say we’re moving toward a world of Zero UI, in which interactions no longer take place on screens. Xbox launched its first-generation Kinect in 2010 to use webcams as peripherals that sensed motion for gameplay. Amazon Echo relies solely on voice interaction. Other devices like the Nest thermostat avoid touchscreens and supply collaboration through voice control, artificial intelligence, and computer vision.

Rather than humans having to adapt to computers, speaking a language they recognize, Zero UI is about creating machines that respond to natural speech and movement.

Voice recognition technology currently involves the user providing input in the form of a question or a command. The device responds to one request at a time, and the user must clearly phrase it. Those who favor a Zero UI reality seek to move away from that to more natural interactions with no screens.

Alexa VP Responds

Al Lindsay, the Alexa vice president, says voice computing won’t completely replace the graphical user interface. He points out that several systems using Alexa have screens. The Echo Show, Echo Spot, and Fire TV rely on text input in conjunction with conversation.

Lindsay says the goal is to make AI like Alexa highly accessible, but that for many situations, GUIs are more efficient. For example, when users need to compare 10 different items, a visual is much easier to process than a verbal description. Sometimes users can see at a glance what would be difficult to convey through even lengthy conversation.

Evaluating Forms of Communication

So how should designers plan for the future? What website design best practices still hold true? Recognize what voice recognition technology means for UI by analyzing the primary means of communication. Most human interaction is nonverbal. Here’s a breakdown of how the senses absorb information.

- Humans process 83 percent of communication by sight.

- Eleven percent of what we understand comes through hearing.

- The other senses also contribute information, with smell accounting for 3 percent, touch for 2 percent, and taste for 1 percent.

Even when we’re having a verbal conversation, we absorb a large part of the verbal transaction through sight, so it’s not a matter of choosing one or the other. The most effective design incorporates text, images, and voice interfaces to help users effortlessly process complex information.

Additional Security Issues

Users didn’t have to worry about security or privacy with early GUI generations, but security became a top concern as capability and networking increased. Voice technology adds further complications.

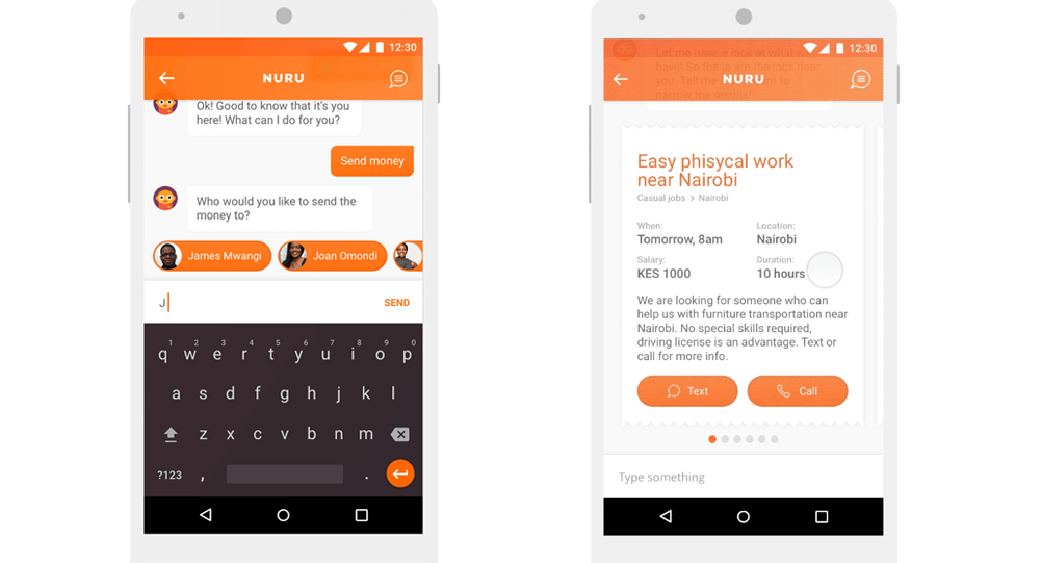

Voice recognition technology is most useful when it is always listening for input. Alarm systems keep families safe when they “learn” a family’s routines and voices to detect abnormalities that might indicate risk. Alexa is helpful because she’s always ready to order pizza or give weather updates, and users can send money securely by giving a command to a chatbot.

Source: Smashingmagazine

However, with voice-activated microphones in every room, on every device, it’s hard to find the line between usefulness and privacy concerns. When police investigated an Arkansas murder, they asked Amazon for data Alexa might have overheard.

UX design has always involved helping users feel not just supported, but safe. As voice technology continues to evolve, designers will have to adapt voice user interfaces to ensure their users are protected.

Voice User Interface Design Is Life-Changing

While conversation has been around since the beginning, speech recognition is revolutionizing UX. Amazon Echo has already provided significant improvement for customers with mobility, cognitive, or visual impairments.

Source: Businessinsider

Users set alarms, access information, and send communications easily without having to take their attention from what they’re doing. Speech recognition will continue to make text-based apps more accessible to people with dyslexia and other reading disabilities.

Designers should continue to combine voice and graphical user interfaces for an immersive experience. Add voice recognition software to the design and use visual feedback to enhance each step of the interaction. Amazon’s Echo Dot shows a swirling blue light when users call Alexa’s name to signal the device is ready for input. Speech recognition is transforming the game.
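One way to think about pairing voice with visual feedback is as a small state machine in which every step of a voice interaction has a matching visual cue. The sketch below is only an illustration under stated assumptions: the state names, event names, and every cue except the swirling blue light are hypothetical, and this is not how any particular device is implemented.

```python
from enum import Enum


class VoiceState(Enum):
    """Hypothetical stages of a single voice interaction."""
    IDLE = "idle"
    LISTENING = "listening"
    PROCESSING = "processing"
    RESPONDING = "responding"


# Visual cue shown in each state. Only the "swirling blue light" comes from
# the article; the other cues are assumptions made up for illustration.
VISUAL_CUES = {
    VoiceState.IDLE: "light off",
    VoiceState.LISTENING: "swirling blue light",
    VoiceState.PROCESSING: "pulsing light",
    VoiceState.RESPONDING: "steady light plus on-screen text",
}

# Allowed transitions, keyed by (current state, event). Event names are
# hypothetical.
TRANSITIONS = {
    (VoiceState.IDLE, "wake_word"): VoiceState.LISTENING,
    (VoiceState.LISTENING, "speech_end"): VoiceState.PROCESSING,
    (VoiceState.PROCESSING, "result_ready"): VoiceState.RESPONDING,
    (VoiceState.RESPONDING, "done"): VoiceState.IDLE,
}


def step(state, event):
    """Return the next state and its visual cue.

    Events that are not valid in the current state leave it unchanged.
    """
    next_state = TRANSITIONS.get((state, event), state)
    return next_state, VISUAL_CUES[next_state]


if __name__ == "__main__":
    state = VoiceState.IDLE
    for event in ["wake_word", "speech_end", "result_ready", "done"]:
        state, cue = step(state, event)
        print(f"{event}: {state.value} ({cue})")
```

Keeping the cues in a single table makes it easy to check that no step of the conversation leaves the user without visual confirmation of what the device is doing.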

The good news is that it doesn’t have to be voice recognition technology vs. graphical user interface. The marriage of these two can be transformative, leading to enhanced UX. When experts design each element with the user in mind, it becomes something much more than a human using their device. It transforms into an experience, a conversation, a deeply personal interaction that makes that use
